Some Massachusetts cities and towns have pre-crime panels that collect and share confidential data in the name of community safety.

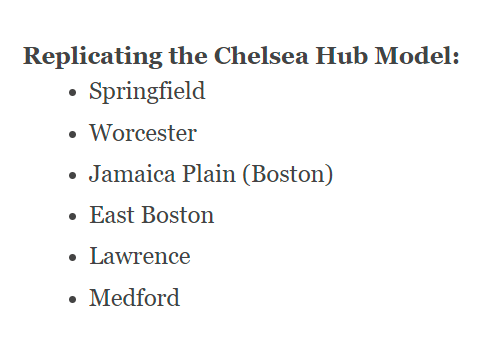

An international policing initiative has been in place in cities across Massachusetts for several years. The Hub and COR (Center of Responsibility) program was first adopted by the city of Chelsea, MA, in 2015[i]. That same year, leaders from the City of Springfield attended a presentation hosted by the city of Chelsea and given by Dale McFee, the director of the Hub and COR program in Prince Albert, Saskatchewan, Canada[ii]. In 2018, Springfield was awarded $25,000 in state seed funding to implement the Hub and COR model[iii]. Several other cities, towns, and regions in Massachusetts have created community policing programs based on this model:

What is Hub and COR?

Hub and COR is a community policing program that was first introduced to North America in Canada. It is modeled after a similar program in Glasgow, Scotland[iv], making it a truly international method of policing.

The program uses data-driven models to identify individuals who may be at acute risk. Their cases are discussed at regular meetings among multiple local agencies, including, but not limited to, healthcare institutions, social services, mental health services, public schools, and law enforcement. A four-step framework called the Four Filter Process is meant to protect a subject’s privacy: personally identifiable information is revealed only if the case moves to the next phase, where it is needed to address the situation. If the person is deemed sufficiently at risk, representatives from the involved agencies work together to intervene, sometimes immediately.

Below is a screenshot from the Plymouth County Hub website that lists some risk factors considered and discussed between agencies to determine whether an intervention is warranted. Also included is a list of Community Partners who would be involved in the sharing of information:

From a 2017 Vice article:

“But if Hub members agree that if a person or family displays a sufficient number of risk factors—such as drinking alcohol, missing school, or exhibiting “negative behavior,” an ill-defined term—the person’s information is shared with other agencies and an intervention is planned. This can include an unannounced visit to the person’s home, contacting family members and friends, or other steps determined by the agencies”[v]

Concerns

Privacy is the number one concern. Personal data is collected by nearly every public agency, healthcare facility, website, and app. Although the program claims to protect privacy until a subject has been identified, the fact that all of these agencies are collecting, sharing, and collaborating on this information in the first place should alarm everyone. Most likely, the people involved in these meetings have good intentions. However, multi-organizational systems like these are breeding grounds for abuse.

More from the Vice article:

“‘One of the things being used to identify risk of suicide or depression is the posting of “emo” lyrics [online],’ said Steeves. She also noted the rise of companies that train school staff how to surveil students on social media to identify risk factors.”[vi]

Abuse of information could easily happen, given the history of our public offices using our personal information against us. It wasn’t too long ago that authorities told people to get vaccinated or risk losing their jobs. People who didn’t wear masks in stores were threatened with legal action, tagged as a threat to society, and even called terrorists. Personal data already in the system, especially mental health data, could provide fuel for red-flag gun laws. The professionals on the Hub and COR panels have the power to use data to create scenarios that justify their existence and, therefore, their jobs.

This is not to say that the decision-makers would intentionally set the innocent up for an intervention. In fact, many of these people genuinely believe they are doing what is best for society by addressing pre-crime behavior. Even so, whatever framework they create, or are given by higher-up agencies, could be used against anyone.

Consider middle and high school guidance offices where counselors are inundated with hormonal, emotional teenagers every day. All of the personal information the school has on these vulnerable kids is available for use at Hub meetings.

Regardless of how successful the Hub and COR initiatives are at stemming crime, anyone who has ever been yelled at for not wearing a mask or not getting vaccinated, or been given the side eye for wearing a MAGA hat or not using the correct pronouns, should be appropriately skeptical of the power these agencies wield while holding people’s confidential data in their hands.

Is your city or town on the list?

by Jana K.

[i] https://shelterforce.org/2019/07/17/connecting-the-citys-social-services-to-help-at-risk-populations/

[ii] https://chelsearecord.com/2015/03/12/chelsea-police-department-to-co-host-training-on-innovative-hub-cor-public-safety-model/

[iii] https://www.springfield-ma.gov/cos/news-story?tx_news_pi1%5Baction%5D=detail&tx_news_pi1%5Bcontroller%5D=News&tx_news_pi1%5Bnews%5D=13847&cHash=b3f681dbebfcacd5601289c33c75e5f7

[iv] McFee, D. R., & Taylor, N. E. (2014). The Prince Albert Hub and the emergence of collaborative and risk-driven community safety. Ottawa: Canadian Police College. [link to file below]

[v] https://www.vice.com/en/article/mg7w4x/canada-hub-and-cor-policing-privacy-police

[vi] https://www.vice.com/en/article/mg7w4x/canada-hub-and-cor-policing-privacy-police
